RKE2 Architecture & Design

This guide details the architectural decisions and patterns I use for production RKE2 deployments, with a focus on high availability, security, and edge computing requirements.

High-Level Architecture

I deploy RKE2 in two main architectural patterns depending on the use case:

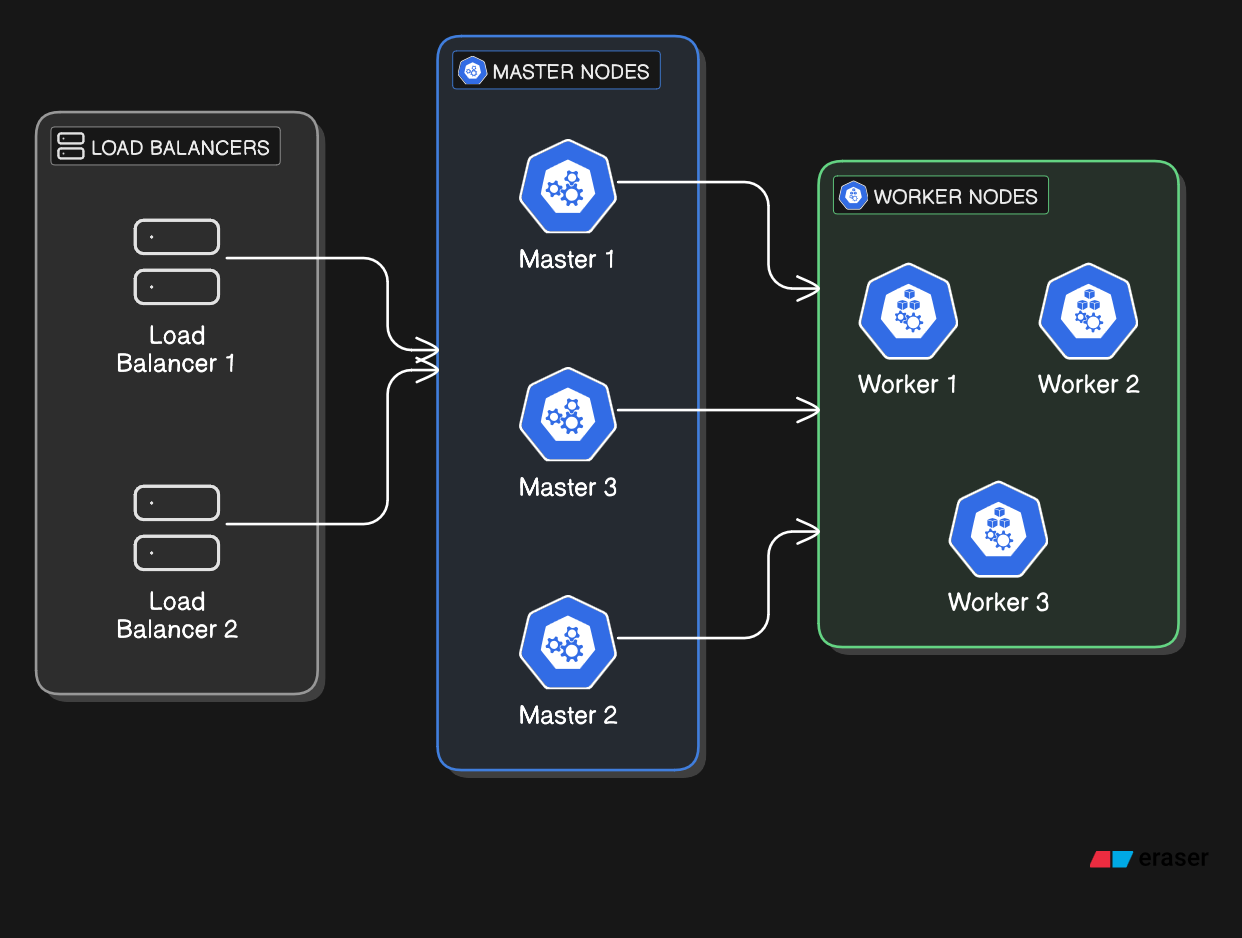

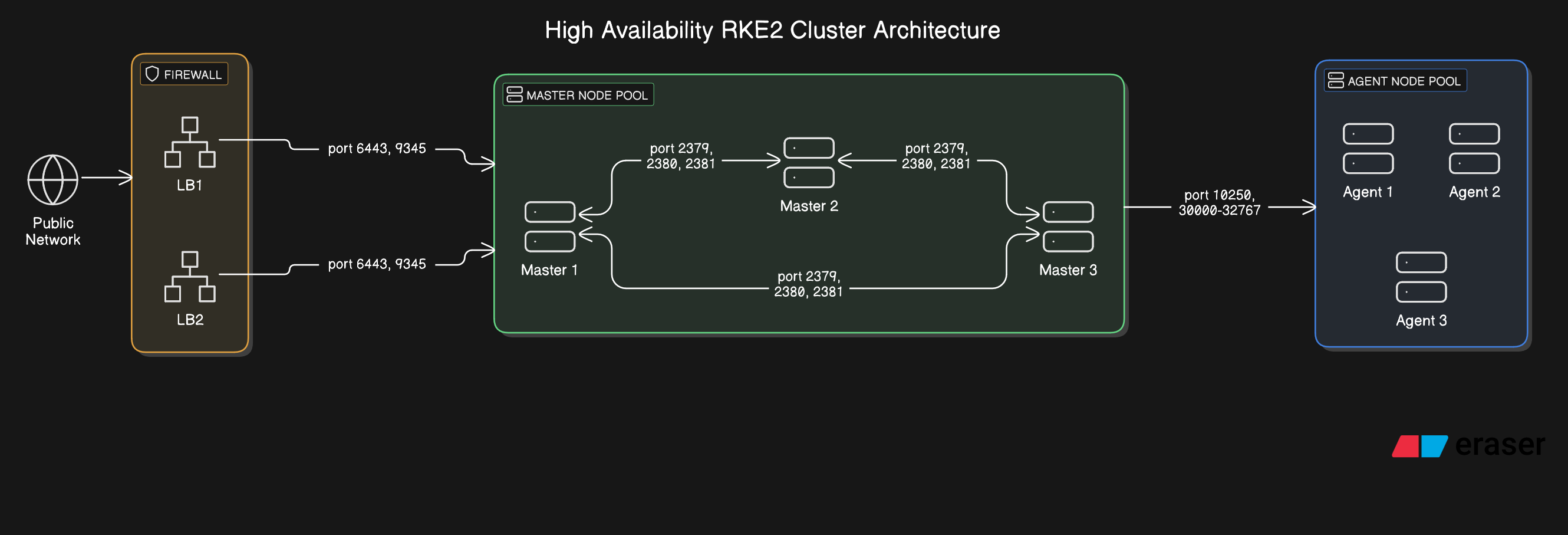

High Availability Architecture (Production/Datacenter)

For production environments, I use a 3-server, 3-agent pattern with external load balancing.

Edge Computing Architecture (Resource-Constrained)

For edge deployments, I use a 1-server, 2-agent pattern optimized for resource constraints.

Core Components

High Availability (HA) Pattern:

- Server Nodes (3x): Control plane with embedded etcd for quorum

- Agent Nodes (3+): Worker nodes for application workloads

- Load Balancers (2x): Layer 4 TCP load balancing for HA

- Cilium CNI: eBPF-powered networking and security
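The HA roles above map onto RKE2's per-node `config.yaml`. The following is a minimal sketch, assuming the load balancer is reachable as `lb.example.com` and using a placeholder token; each YAML document below stands for a separate file on a different node, and the values are illustrative rather than taken from a running cluster.

```yaml
# s1 (first server): /etc/rancher/rke2/config.yaml
token: "REPLACE_WITH_SHARED_TOKEN"   # placeholder, not a real secret
tls-san:
  - lb.example.com                   # assumed LB name; must appear in the API server certificate
cni: cilium
disable-kube-proxy: true             # Cilium takes over service handling
---
# s2 and s3 (joining servers): same file path on each node
server: https://lb.example.com:9345  # supervisor API, reached through the load balancer
token: "REPLACE_WITH_SHARED_TOKEN"
tls-san:
  - lb.example.com
cni: cilium
disable-kube-proxy: true
---
# a1..a3 (agents): only the registration address and token are needed
server: https://lb.example.com:9345
token: "REPLACE_WITH_SHARED_TOKEN"
```

Joining through the load balancer rather than a single server's IP keeps node registration working when any one server is down.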

Edge Computing Pattern:

- Server Node (1x): Single control plane for resource efficiency

- Agent Nodes (2+): Minimal worker nodes for edge workloads

- No External LB: Direct access via VPN overlay (Tailscale)

- Cilium CNI: Same advanced networking in resource-constrained environment
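The server counts in both patterns follow from etcd's Raft quorum rule. As a quick worked check (standard Raft arithmetic, not specific to RKE2), for $n$ voting etcd members the quorum size $q$ and the number of tolerated member failures $f$ are:

$$
q(n) = \left\lfloor \frac{n}{2} \right\rfloor + 1, \qquad f(n) = n - q(n)
$$

For the HA pattern, $n = 3$ gives $q = 2$ and $f = 1$, so the control plane survives the loss of one server. For the edge pattern, $n = 1$ gives $q = 1$ and $f = 0$: the single server is a single point of failure for the control plane, which is the trade-off accepted for lower resource use. An even count adds no tolerance ($n = 4$ gives $q = 3$, $f = 1$), which is why server counts are kept odd.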

Network Architecture

Port Matrix

| Port | Protocol | Source | Destination | Description |
|---|---|---|---|---|
| 6443 | TCP | Agents + LB | Servers | Kubernetes API Server |
| 9345 | TCP | Agents + Servers + LB | Servers | RKE2 Supervisor API (node registration) |
| 10250 | TCP | All Nodes | All Nodes | Kubelet API |
| 2379 | TCP | Servers | Servers | etcd Client Port |
| 2380 | TCP | Servers | Servers | etcd Peer Communication |
| 2381 | TCP | Servers | Servers | etcd Metrics |
| 30000-32767 | TCP | All Nodes | All Nodes | NodePort Service Range |

Cilium-Specific Networking

| Port | Protocol | Source | Destination | Description |
|---|---|---|---|---|
| 4240 | TCP | All Nodes | All Nodes | Cilium Health Check |
| 4244 | TCP | All Nodes | All Nodes | Cilium Hubble gRPC |
| 4245 | TCP | All Nodes | All Nodes | Cilium Hubble Relay |
| 8472 | UDP | All Nodes | All Nodes | VXLAN Overlay (when enabled) |
| 51871 | UDP | All Nodes | All Nodes | WireGuard (when enabled) |
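The two conditional rows above correspond to Cilium Helm values. In RKE2 these are typically set through a `HelmChartConfig` that overrides the bundled `rke2-cilium` chart. A minimal sketch, assuming Cilium 1.14 or later (where `routingMode` and `tunnelProtocol` replaced the older `tunnel` key); the file name is arbitrary and chosen here for illustration:

```yaml
# /var/lib/rancher/rke2/server/manifests/rke2-cilium-config.yaml (file name is an assumption)
apiVersion: helm.cattle.io/v1
kind: HelmChartConfig
metadata:
  name: rke2-cilium
  namespace: kube-system
spec:
  valuesContent: |-
    routingMode: tunnel        # encapsulate pod-to-pod traffic between nodes
    tunnelProtocol: vxlan      # VXLAN overlay, needs UDP 8472 open between nodes
    encryption:
      enabled: true
      type: wireguard          # node-to-node encryption, needs UDP 51871 open
```

If the cluster uses native routing and no encryption, neither UDP port has to be opened.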

Load Balancer Configuration

Layer 4 TCP Load Balancing

For high availability, I deploy two load balancers in active-passive configuration:

```nginx
# Primary load balancer configuration (nginx stream module, Layer 4 TCP)
stream {
    # Kubernetes API servers
    upstream rke2-servers {
        server 192.168.10.10:6443 max_fails=3 fail_timeout=5s;
        server 192.168.10.11:6443 max_fails=3 fail_timeout=5s;
        server 192.168.10.12:6443 max_fails=3 fail_timeout=5s;
    }

    server {
        listen 6443;
        proxy_pass rke2-servers;
        # Idle timeout; long-lived watch, logs, or exec streams may need a larger value
        proxy_timeout 10s;
        proxy_connect_timeout 5s;
    }

    # RKE2 supervisor API, used by nodes when they register with the cluster
    upstream rke2-supervisor {
        server 192.168.10.10:9345;
        server 192.168.10.11:9345;
        server 192.168.10.12:9345;
    }

    server {
        listen 9345;
        proxy_pass rke2-supervisor;
    }
}
```

Health Check Configuration

```bash
# Health check endpoint
curl -k https://lb.example.com:6443/healthz
```

Node Specifications

Production Hardware Requirements

| Component | Server Nodes | Agent Nodes | Notes |
|---|---|---|---|
| CPU | 4+ cores | 2+ cores | ARM64 or x86_64 |
| Memory | 8 GB+ | 4 GB+ | More for large clusters |
| Storage | 50 GB+ SSD | 20 GB+ SSD | etcd requires fast I/O |
| Network | 1 Gbps+ | 1 Gbps+ | Low latency preferred |

Example Node Layout

| Hostname | Internal IP | External IP | Role | Arch | OS |
|---|---|---|---|---|---|
| s1 | 192.168.10.10 | 100.64.1.10 | Server | x86_64 | Ubuntu 24.04 |
| s2 | 192.168.10.11 | 100.64.1.11 | Server | x86_64 | Ubuntu 24.04 |
| s3 | 192.168.10.12 | 100.64.1.12 | Server | x86_64 | Ubuntu 24.04 |
| a1 | 192.168.10.13 | 100.64.1.13 | Agent | ARM64 | Ubuntu 24.04 |
| a2 | 192.168.10.14 | 100.64.1.14 | Agent | ARM64 | Ubuntu 24.04 |
| a3 | 192.168.10.15 | 100.64.1.15 | Agent | ARM64 | Ubuntu 24.04 |

Cilium Architecture

eBPF-Native Networking

Cilium replaces kube-proxy entirely using eBPF for maximum performance:

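```yaml
# Cilium configuration
cilium:
  kubeProxyReplacement: true
  bpf:
    masquerade: true
  # hostServices is accepted by older Cilium charts; newer releases deprecate it in favor of socketLB
  hostServices:
    enabled: true
  hubble:
    enabled: true
    relay:
      enabled: true
    ui:
      enabled: true
```

Service Mesh Integration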

Cilium provides L7 policies and observability without sidecars:

- Network Policies: eBPF-enforced microsegmentation

- Load Balancing: Direct packet steering without NAT

- Observability: L3 to L7 flow visibility via Hubble

- Encryption: Transparent WireGuard mesh
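To make the L7 policy point concrete, here is a sketch of an HTTP-aware `CiliumNetworkPolicy`. The policy name, the `app: frontend` and `app: api` labels, port 8080, and the path pattern are all hypothetical and chosen only for illustration; with a rule like this, Cilium redirects matching traffic through its node-local Envoy proxy rather than a per-pod sidecar.

```yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: api-read-only            # hypothetical name
spec:
  endpointSelector:
    matchLabels:
      app: api                   # hypothetical workload label
  ingress:
    - fromEndpoints:
        - matchLabels:
            app: frontend        # hypothetical client label
      toPorts:
        - ports:
            - port: "8080"
              protocol: TCP
          rules:
            http:
              - method: GET      # only GET requests are allowed
                path: "/v1/.*"   # path is matched as a regular expression
```

Requests from `frontend` pods that do not match the HTTP rule (for example a `POST`) are rejected at L7, while traffic from any other source is dropped at L3/L4 because no rule selects it.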

Edge Computing Adaptations

Bandwidth Optimization

- Image Caching: Local registry mirrors for reduced bandwidth

- Compression: Enable gzip compression for API communication

- Traffic Shaping: Prioritize control plane traffic
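For the image caching item, RKE2 reads containerd mirror settings from `registries.yaml` on each node at startup. A minimal sketch, assuming a local pull-through cache at the hypothetical address `registry.edge.example.com:5000`:

```yaml
# /etc/rancher/rke2/registries.yaml (must exist on every node that should use the mirror)
mirrors:
  docker.io:
    endpoint:
      - "https://registry.edge.example.com:5000"   # hypothetical local mirror, tried before the upstream registry
```

The RKE2 service has to be restarted on a node for changes to this file to take effect.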

Intermittent Connectivity

- Leader Election: Increased timeout values for unstable networks

- Heartbeat Tuning: Adjusted kubelet and etcd intervals

- Local Storage: Persistent volumes on local SSDs
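The timeout and heartbeat items above can be expressed as component arguments in the server's `config.yaml`. The sketch below shows where such tuning would go; the specific values are illustrative assumptions, not measured recommendations, and should be validated against the actual link quality of each site.

```yaml
# /etc/rancher/rke2/config.yaml on the server (values are illustrative assumptions)
kube-controller-manager-arg:
  - "leader-elect-lease-duration=60s"    # default 15s
  - "leader-elect-renew-deadline=40s"    # must stay below the lease duration
  - "leader-elect-retry-period=10s"
  - "node-monitor-grace-period=2m"       # wait longer before marking a node NotReady
kubelet-arg:
  - "node-status-update-frequency=20s"   # fewer status heartbeats over a slow link
etcd-arg:
  - "heartbeat-interval=500"             # milliseconds; only relevant with multiple etcd members
  - "election-timeout=5000"              # milliseconds; keep well above the heartbeat interval
```

Longer grace periods trade slower failure detection for fewer spurious NotReady transitions and pod evictions during short outages.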

Remote Access Pattern

For edge deployments, I integrate Tailscale for secure remote management:

```yaml
# Node configuration with Tailscale
node-ip: 192.168.10.10            # Internal LAN IP
node-external-ip: 100.64.1.10     # Tailscale overlay IP
tls-san:
  - "192.168.10.10"               # Internal access
  - "100.64.1.10"                 # Remote access via Tailscale
  - "lb.edge.example.com"         # Load balancer FQDN
```

Security Architecture

Certificate Management

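- Automatic Rotation: RKE2 leaf certificates are valid for 365 days and are renewed automatically when the service restarts inside the renewal window (90 or 120 days before expiry, depending on the release)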

- SAN Configuration: Multi-domain certificate support

- CA Distribution: Secure CA certificate distribution

Network Segmentation

```yaml
# Cilium network policy example
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: edge-cluster-isolation
spec:
  endpointSelector:
    matchLabels:
      env: production
  ingress:
    - fromEndpoints:
        - matchLabels:
            env: production
  egress:
    - toEndpoints:
        - matchLabels:
            env: production
```

Monitoring & Observability

Hubble Integration

Cilium's Hubble provides comprehensive network observability:

- Flow Monitoring: Real-time network flow visualization

- Security Events: Policy violation alerts

- Performance Metrics: Latency and throughput analytics

- Troubleshooting: Per-flow inspection with policy verdicts and drop reasons
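The metrics item depends on Hubble's metric handlers being switched on in the Cilium chart values. A minimal sketch of the relevant override for RKE2's bundled chart, assuming it is merged into whatever `rke2-cilium` HelmChartConfig the cluster already carries; the selection of handlers is an illustrative choice:

```yaml
apiVersion: helm.cattle.io/v1
kind: HelmChartConfig
metadata:
  name: rke2-cilium
  namespace: kube-system
spec:
  valuesContent: |-
    hubble:
      enabled: true
      metrics:
        enabled:        # each entry turns on one Hubble metrics handler
          - dns
          - drop        # dropped flows, including policy denials
          - tcp
          - flow
          - http        # request counts and latency for L7-visible traffic
```

HTTP metrics are only populated for traffic that Cilium actually parses at L7, for example flows covered by an L7 policy.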

This architecture provides a robust foundation for production RKE2 deployments with enterprise-grade security, performance, and observability.